A/B testing and hypothesis-driven development (HDD) are two powerful methodologies for product teams aiming to make data-backed decisions. Together, they allow teams to experiment, measure, and iteratively improve their products by validating user behaviors and needs with real-world data.

Let’s dive into how A/B testing and HDD can transform your product development process.

What Is A/B Testing?

A/B testing is an experimental approach where two versions of a product (or feature) are presented to different segments of users to determine which one performs better. By splitting traffic between version A and version B, product teams can directly measure user preferences and improve features, layouts, or calls-to-action (CTAs).
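To make the traffic split concrete, here is a minimal sketch of one common way to assign users to a version: hash the user ID so the same person always sees the same variant. The function name `assign_variant`, the experiment name, and the user IDs are assumptions made for illustration; dedicated A/B testing tools handle this step for you.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split: float = 0.5) -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hashing the experiment name together with the user ID keeps a user's
    assignment stable across visits and independent between experiments.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 4 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return "A" if bucket < split else "B"


# Hypothetical user IDs and experiment name, for illustration only.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "signup-form-length")] += 1
print(counts)  # the two counts should be roughly equal
```

Hashing instead of picking a variant at random on each visit means a returning user never flips between versions, which would otherwise muddy the comparison.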

For example, imagine testing two versions of a sign-up form. Version A uses a long form while Version B uses a short one. By testing both, you can identify which version produces the higher sign-up conversion rate.

What Is Hypothesis-Driven Development?

Hypothesis-driven development (HDD) is an iterative approach where teams create hypotheses about user behavior, test them, and adjust product development based on what’s learned. It contrasts with assumption-based development, which relies on subjective insights or guesswork.

For instance, a hypothesis might state, "Changing the color of the 'Buy Now' button will increase the number of clicks." Teams then run A/B tests to confirm or refute this hypothesis, creating a structured path for product improvement.

How A/B Testing and HDD Work Together

At the heart of both A/B testing and HDD is experimentation. A well-formed hypothesis can be tested using A/B testing, providing objective, measurable results to guide product development decisions. This combination allows for continuous improvement by verifying changes with actual user behavior.
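As a rough illustration of what "objective, measurable results" can look like, the sketch below runs a two-proportion z-test on conversion counts from two variants. This is one standard way to check whether a difference is statistically significant, not the only one, and the visitor and conversion counts are invented for the example.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Assumes both groups are large and the pooled rate is strictly
    between 0 and 1 (otherwise the standard error is zero).
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: 200 of 5,000 visitors converted on A, 260 of 5,000 on B.
z, p = two_proportion_z_test(200, 5000, 260, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (commonly below 0.05) suggests the observed difference is unlikely to be noise alone, which is the evidence a hypothesis needs before it drives a product decision.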

Best Practices for Hypothesis-Driven A/B Testing

- Start with Clear Hypotheses: For every test, start with a clear, measurable hypothesis (e.g., "Simplifying the checkout process will reduce cart abandonment").

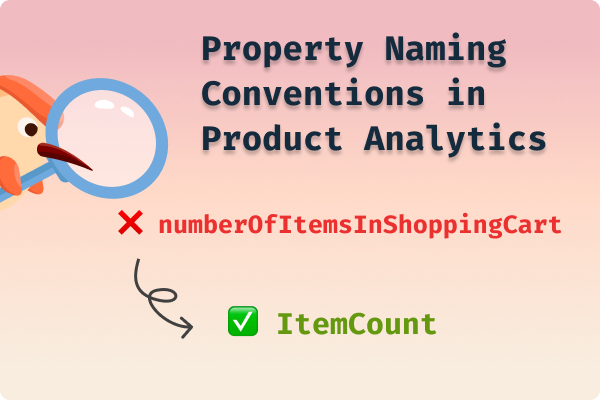

- Choose Meaningful Metrics: Select relevant metrics that reflect user behavior, such as conversion rate or time on page.

- Limit Variables: Test one element at a time (e.g., button color, form length) to isolate the effects and provide clear results.

- Test with a Large Enough Sample: Ensure your test has enough participants to detect the effect you care about with statistical significance.

- Analyze Results Objectively: Avoid bias by focusing on the data and statistical significance.
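To put a number on "enough participants," here is a hedged sketch of the usual normal-approximation formula for comparing two conversion rates. The function name, the 5% significance level, and the 80% power default are assumptions chosen for illustration; your own thresholds may differ.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in EACH variant for a two-sided test.

    baseline: current conversion rate (e.g. 0.10)
    expected: rate you hope the new version reaches (e.g. 0.12)
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return ceil(numerator / (expected - baseline) ** 2)


# Hypothetical goal: detect a lift from a 10% to a 12% conversion rate.
print(sample_size_per_variant(0.10, 0.12))
```

The smaller the change you want to detect, the more users you need, so it helps to run this calculation before the test starts rather than after.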

Common Pitfalls to Avoid

- Testing Too Many Variables: Focus on one variable at a time to avoid confusion in your results.

- Jumping to Conclusions: Don't act on early results. Wait until the test has reached its planned sample size and the difference is statistically significant.

- Ignoring Context: Always consider the broader context, including user demographics and behavior, when analyzing your data.
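One simple way to keep context in view is to break results down by user segment before drawing conclusions. The sketch below assumes each record is a `(segment, variant, converted)` tuple; that layout, the function name, and the sample data are hypothetical.

```python
from collections import defaultdict


def conversion_by_segment(records):
    """Return {segment: {variant: conversion_rate}}.

    records: iterable of (segment, variant, converted) tuples.
    """
    # For each segment and variant, track [conversions, visitors].
    totals = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, converted in records:
        cell = totals[segment][variant]
        cell[0] += int(converted)
        cell[1] += 1
    return {
        segment: {variant: conv / n for variant, (conv, n) in variants.items()}
        for segment, variants in totals.items()
    }


# Invented records, for illustration only.
sample = [
    ("mobile", "A", True), ("mobile", "A", False), ("mobile", "B", True),
    ("desktop", "A", False), ("desktop", "B", True), ("desktop", "B", True),
]
print(conversion_by_segment(sample))
```

A variant that wins overall can still lose within an important segment, so a per-segment view is a useful sanity check. Keep in mind that each segment has fewer users than the full test, so differences inside a segment are noisier.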

Tools for A/B Testing and Hypothesis Development

Popular tools for A/B testing include Optimizely and VWO. Google Optimize was also widely used until Google discontinued it in September 2023. For managing hypotheses and results, consider using Jira, Trello, or Miro to track ideas, tests, and outcomes.

Case Study: A/B Testing and HDD in Action

In one case, an e-commerce company hypothesized that simplifying the checkout process would increase purchases. By testing a simplified checkout flow (Version A) against the current flow (Version B), they measured a 15% increase in conversion rate for the simplified flow. This supported their hypothesis, leading to a permanent change that improved the bottom line.
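When reporting a figure like the 15% above, it helps to say whether it is a relative or an absolute change, since the case study does not specify. The short sketch below shows the difference using invented rates; the 4.0% and 4.6% values are assumptions for illustration, not numbers from the case study.

```python
def absolute_lift(control_rate: float, variant_rate: float) -> float:
    """Difference in conversion rate (multiply by 100 for percentage points)."""
    return variant_rate - control_rate


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Fractional change relative to the control rate."""
    return (variant_rate - control_rate) / control_rate


# Invented rates: 4.0% for the current flow, 4.6% for the simplified flow.
control, simplified = 0.040, 0.046
print(f"absolute lift: {absolute_lift(control, simplified) * 100:.1f} percentage points")
print(f"relative lift: {relative_lift(control, simplified) * 100:.1f}%")
```

With these made-up rates, a gain of 0.6 percentage points is the same result as a 15% relative lift, which is why stating the baseline alongside the headline number avoids confusion.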

Conclusion

Combining A/B testing with hypothesis-driven development enables product teams to take the guesswork out of decision-making. By validating hypotheses through data-backed experiments, teams can confidently improve products and user experiences. Embrace these methods to ensure that every change you make is driven by real user behavior and measurable results.
