User interviews are a common and powerful tool for improving your product’s UX.

Before you can run them effectively, though, you need to plan ahead a little bit.

Below is all the information you need for planning and conducting great user interviews, from choosing the right format to asking the best questions.

At the end, you can also read about our own experience with user interviews: how we ran them, what we changed over time, and what we learned along the way.

What Are User Interviews?

User interviews are (typically) one-on-one discussions with a user to gather feedback relating to your product or product idea. They usually last 30-60 minutes.

They’re a popular method of UX research. The point is to gain a deeper understanding of users’ attitudes, pain points, behaviors, needs, and motivations in relation to your product. This, in turn, allows you to build a better solution that people are happy to use and will keep using.

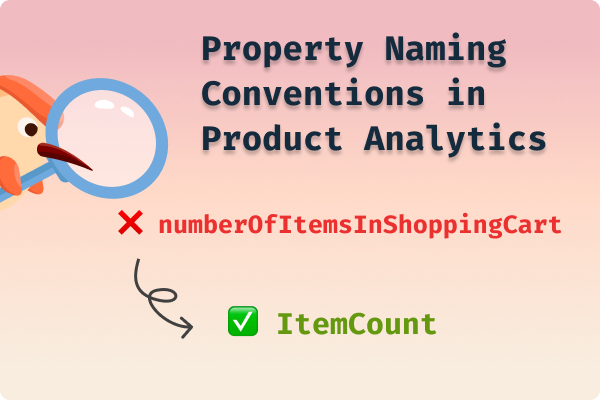

It’s hard to gather all this insight from numbers and product analytics alone. Interviewing users personally brings more nuance to the table.

Choosing the Interview Type

There are quite a few ways to categorize user interviews into different types: evaluative vs. generative, quantitative vs. qualitative, and so on. Another popular division is structured vs. unstructured vs. semi-structured (as you’ll see below).

To make things simpler, we’ve combined all the most relevant information below for choosing what kind of user interview you want to conduct.

Simply choose a method, frequency and location, and you’re good to go.

For context, the most common type of user interview is probably semi-structured, one-time and remote.

(1) Method

User interviews vary in structure and flexibility, offering different approaches to gathering user feedback depending on the research goals and context. These methods range from rigid, predefined formats to completely open-ended conversations.

Structured

Structured interviews follow a strict script with pre-planned questions asked in a specific order. They’re designed for consistency, making it easier to compare responses across participants. While they often include closed-ended questions (e.g., “yes or no”), they can also feature open-ended ones as long as they adhere to the script.

This method is ideal for evaluative research, such as validating specific features or hypotheses. However, it limits flexibility and is less effective for exploring new ideas. Structured interviews often take the form of surveys or usability tests.

Unstructured

Unstructured interviews are the most flexible and open-ended method, often resembling a free-flowing conversation. The interviewer may start with a general topic or objective but does not rely on a formal script. Instead, they allow the participant to guide the discussion based on their thoughts, experiences, and priorities.

This method is best for generative research, where the goal is to uncover new ideas or understand a user’s context. For example, starting with, “Tell me about your workflow,” can lead to unexpected insights. While rich in detail, unstructured interviews are harder to standardize and compare across participants.

Unstructured interviews are particularly useful in the early stages of product development, where the goal is to gain an in-depth understanding of user needs and experiences rather than validate specific ideas.

Semi-Structured

Semi-structured interviews combine the consistency of structured methods with the flexibility of unstructured ones. They begin with a set of predefined questions but allow for deviations to explore interesting responses.

This approach works well for both generative and evaluative research, making it the most popular method. For instance, you can ask, “What problem were you trying to solve with our product?” and then adapt follow-up questions based on the participant’s answers. Semi-structured interviews provide both comparability and the opportunity to dig deeper into user behaviors and attitudes.

This format is particularly valuable for qualitative research, where understanding the "why" behind user behaviors and attitudes is key.

(2) Frequency

Once

One-time interviews are conducted as a single session, typically for a specific research objective. These are ideal when you need feedback on a particular feature, design, or idea without the need for follow-up.

For instance, you might conduct a one-time interview to understand user reactions to a prototype, asking questions like, "What do you think of this layout?" or "What challenges did you face while completing this task?" This type of interview provides a snapshot of user feedback, helping inform immediate design or product decisions.

Multiple Times

You might want to have a follow-up meeting with the same interviewees some weeks or months after the initial meeting, for example if you’ve taught them about a new feature and now want to see how they’ve adopted it in their workflow.

Before your first meeting, you don’t have to know if there’s going to be a second one. If everything goes smoothly and the interviewee seems open to it, you can suggest a follow-up meeting after the initial one when you think it’s time.

Alternatively, you can conduct continuous interviews on a regular, rolling basis.

These are especially useful for agile product development or iterative design processes, where ongoing feedback is crucial for refining the product.

(3) Location

Lastly, you’ll have to decide whether your interviews will be in person or online.

It’s much easier to set up online interviews since there’s no need for commuting. Being remote also allows you to be more flexible with interviewing people all around the world.

However, in some cases it might be worth the hassle to meet face to face. For example, reading nuances in body language and showing prototypes are usually easier in person.

What to Do Before the Interview

Prepare Your Questions and Materials

Before the interview, draft your questions on your laptop or on paper with the interview goal in mind. Start with broader, open-ended questions to help the conversation flow and include more specific follow-ups to dig deeper into particular areas. Be sure to have extra questions ready in case the conversation takes an unexpected turn.

It can also be helpful to find out some basic information about the interviewee beforehand. This might include their company, how long they’ve been using your product, or any previous feedback they’ve provided.

Set Expectations

Let the participant know in advance that the session will be recorded (if applicable), and provide them with the option to request not to be recorded if they’re uncomfortable.

If you’re offering a reward, such as a gift card, confirm this with the participant ahead of time so they know what to expect.

How to Run the Interview

Below is a checklist of things to remember during the interview. Note that the tips are mainly meant for semi-structured interviews.

Opening the Meeting

- Engage in small talk: A nice way to ease into the conversation is to ask the other person about their day or where they’re based, and share similar information about yourself.

- Thank them for their time: A simple acknowledgment goes a long way in making participants feel valued.

- Introduce yourself: Share your name, role, and possibly something else about the company or project to establish more context.

- Explain the goal and context of the meeting: Let them know why their input is important and emphasize there are no right or wrong answers.

- Remind them of the reward: Confirm what they’ll receive after the meeting, such as a gift card, and thank them again for participating.

Asking Questions

Begin with open-ended questions to gauge overall attitudes and behaviors.

For example:

- “Can you tell me a bit about your role?”

- “What made you decide to try our product?”

- “How does our product fit into your workflow?”

Dig deeper with more specific follow-up questions such as:

- “What’s the most useful feature of the product for you?”

- “What specifically made that feature helpful for you?”

- “Can you give me an example of when that happened?”

It’s important to understand the user’s context first with broader questions, because the answers might reveal a use case you didn’t expect. You can’t assume every user shares the same baseline, so you might need to adjust your follow-ups according to the answers you get at the beginning.

General Advice

- Avoid hypotheticals: Focus on past behavior rather than speculative answers. Real experiences provide more reliable insights than hypothetical wishes. For instance, instead of asking, “What would you do if we added this feature?” ask, “How did you handle this situation last time?”

- Be curious, not judgmental: Don’t hesitate to ask for clarification or to play naive by asking about seemingly obvious things. This often reveals surprising insights.

- Treat your planned questions as a guide: It’s okay to explore tangents if the participant shares something particularly interesting.

- Get comfortable with silence: If a participant seems hesitant, wait a few seconds to give them space to think. Often, they’ll fill the silence with valuable insights.

- Keep track of time to ensure you cover the most critical questions: Cut someone off gently if they’re going off-topic or repeating points, but embrace improvised conversations if they reveal unexpected insights relevant to your goals.

- Pay attention to non-verbal cues: Watch for tone, pauses, and facial expressions. These can be harder to detect in remote settings, so keep an eye on the video feed and listen closely.

- Have a dedicated note-taker like Wudpecker or record the session for later review.

Wrapping It Up

As the session is coming to an end:

- Preface your final questions: Say something like, “We’re nearing the end of our time, but I have just one or two more questions.”

- Ask for additional insights: End with, “Do you have any questions for us or other feedback?” This gives participants a chance to share valuable thoughts that might not have come up during structured questions.

- Thank the participant sincerely: Show gratitude for their time and input.

- Confirm next steps: Let them know when they can expect their reward or if there will be any follow-up.

Tips for Conducting Remote User Interviews

- Send clear instructions ahead of time: Provide details on how to join the session, including links, passwords, or technical requirements.

- Test your systems: Check your audio, video, and screen-sharing tools before the meeting to avoid technical issues.

- Look into the camera: This creates a sense of connection and makes the participant feel more engaged.

- Handle late attendees efficiently: If a participant is late, use tools like Google Calendar to send a quick message directly from the meeting invite, letting them know you’re already in the meeting room and waiting for them to join. This avoids confusion and maintains professionalism.

What to Avoid

To get the most valuable insights from your user interviews, it’s important to steer clear of certain pitfalls that can skew the conversation or limit the depth of responses. Here are key things to avoid:

(1) Using Vague Questions Without Context

Vague questions can leave participants unsure how to answer. Provide context or examples to clarify what you’re asking.

Instead of...

“What do you think about this?”,

ask:

“What do you think about how this feature fits into your daily workflow?”

Adding specifics makes it easier for participants to give actionable feedback.

(2) Leading and Assumptive Questions

Avoid framing questions in a way that pushes the participant toward a specific answer. For example, instead of asking,

“You find this feature helpful, right?”

say,

“How do you feel about this feature?”

Leading questions can bias the feedback and make it less reliable.

(3) Asking Many Questions in One

When you combine multiple questions into a single query, participants can get confused or overwhelmed. For instance, instead of asking, “Do you think this feature is easy to use and would you recommend it to others?” break it into two clear questions:

- “How easy is this feature to use?”

- “Would you recommend it to others? Why or why not?”

(4) Asking for Ideas or Improvements

While it’s tempting to ask users how to improve your product, this can lead to unhelpful suggestions or solutions that don’t align with your goals. Focus on understanding what the participant is trying to accomplish and their current workflows. For instance, ask:

- “What are you trying to achieve with this tool?”

- “Are there other tools you’re using to solve this problem? How do they compare to ours?”

(5) Yes/No Questions

In semi-structured and unstructured user interviews, avoid simple yes/no questions, as they rarely provide meaningful insights. Instead, ask open-ended questions that encourage participants to elaborate.

For example:

- Instead of: “Do you like this feature?”

- Ask: “What do you like or dislike about this feature?”

(6) Blindly Accepting All Answers at Face Value

Even with non-leading, well-structured questions, some users might unintentionally tailor their answers to please you or, in rare cases, provide answers they think will end the interview faster.

If a response seems suspicious or overly vague, gently probe further to clarify or validate the claim. For example, if a user says, “I use this feature all the time,” you might ask follow-up questions like:

- “Can you walk me through a recent time you used it?”

- “What specifically do you find most helpful about it?”

- “What made you choose our feature over alternatives?”

How We Conducted User Interviews

There were two phases to conducting the user interviews. First, we wanted to understand the broad strokes of how people use Wudpecker. Then, we explored more specific user experiences on a deeper level.

Generally, our meetings followed this basic structure:

- General level – understanding broad usage patterns

- Deeper insights – exploring pain points and needs in detail

- Validation – getting perspective on our product decisions

However, depending on the phase, the specific questions we asked varied.

Phase 1: Scoping Out How Wudpecker Is Being Used

In this phase, we focused on getting a broad overview of how users were interacting with Wudpecker across different contexts.

(P.S. In case you didn’t know, Wudpecker is an AI notetaker.)

Example Questions:

- General level: What kind of meetings do you use Wudpecker for?

- Deeper insights: In those meeting notes, what insights are most valuable to you? Can you give an example?

- Validation: Are the summaries we provide detailed and accurate enough? Have you had to edit them?

This phase was crucial for establishing a baseline understanding of Wudpecker’s usage. It helped us identify common patterns across different types of users and their pain points.

We knew we had reached a saturation point when the feedback from users became repetitive and predictable. At this point, we had gathered enough data about the typical ways people were using Wudpecker and their feedback.

Now we could move on to a different approach of interviewing.

Phase 2: Improvising and Digging Deeper into Individual Use Cases

Once we had the general landscape mapped out, the second phase of our interviews focused on uncovering deeper insights by allowing for more improvisation and flexibility.

Instead of sticking to a rigid script, we tailored each conversation to the user's specific context and the feature we wanted to explore.

This let us pinpoint specific issues with features and onboarding processes that we hadn’t fully understood in the first phase.

How This Phase Was Different

- Greater flexibility: Instead of rigidly sticking to a preset list of questions, we allowed the conversation to flow naturally based on the user's responses. This approach helped us uncover more detailed insights into specific use cases.

- Going beyond surface-level questions: Previously, we didn’t have time to get into why a user might, for example, copy parts of their Wudpecker notes to a CRM tool. Now we did, and we tried to understand the reasons behind such behaviors. What does the other tool offer that we don’t? Is it reasonable to imagine Wudpecker one day providing that same feature and eliminating the need to use the other tool?

Example Questions

- General level: What were your first impressions of the new Collections feature?

- Deeper insights: Can you walk us through exactly how you tried to use the feature and why?

- Validation: Did the onboarding process for this feature feel clear or confusing? How could it be improved?

💡 Lessons Learned

1) Change Strategy When You’re Not Learning Anything New

Initially, we focused on understanding general usage patterns, but once we had enough high-level insights, we dug deeper into specific user experiences and pain points. This shift ensured we weren’t just gathering surface-level information but also more detailed, actionable insights.

2) Concrete Examples Are Invaluable

Encouraging users to share their screens or walk you through their exact process with examples provides valuable context for understanding their interaction with the product.

Some users may be hesitant to share too much detail, so it’s important to reassure them that they don’t need to disclose anything confidential. If they’re still uncomfortable, it’s fine to leave it at that—no need to push further. However, it’s always worth asking, as these real-world examples are the most effective way to understand what the user is trying to communicate.

3) Expect the Unexpected

Conversations don’t always go as planned, and that’s perfectly fine. While it’s important to let the conversation flow naturally, it’s equally crucial to know when to gently guide it back on track. Flexibility is key—sometimes tangents reveal the most important information, but other times it’s more effective to refocus on your main questions. Keep your core objectives in mind, or you risk getting sidetracked by unexpected detours.

Conclusion

You learn best by doing. The more user interviews you have under your belt, the more comfortable you become organizing and conducting them.

However, it’s always a good idea to learn from others’ experiences and mistakes and to come somewhat prepared. In this post, we covered the essentials you need to know about planning and running user interviews.

Don’t forget to also stay curious and open to the unexpected insights that can shape your product’s future.

Your users—and your product—will thank you!
